Our latest class poll produced the following result: 70 percent of those who responded got it wrong (see the poll’s outcome in the chart below). Given that we just began to discuss sampling distributions and their connection to hypothesis testing in class, allow me to further develop the poll’s central issue in modest detail. We’ll continue this discussion in class. I hope you’re taking notes.

Correct Answer to Our Class Poll: A Sampling Distribution (#4)

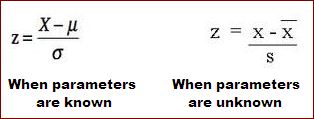

Let’s start off with a common activity among researchers: We want to test a hypothesis using a specific statistic (e.g., z, t, Chi-Square, etc.) calculated from a random sample drawn from a population of interest. That is, we want to test a hypothesis based on a statistic we calculate from raw scores that comprise our sample data (our sample distribution). Once we use a standardized procedure to calculate the statistical outcome based on sample data (for example, t=2.13), we have the following question to address:

Q: How do we know if this singular outcome is statistically significant so we can reject the null hypothesis? That is, how do we know whether the resulting outcome (t=2.13) occurred by chance (an outcome that is not significant) or is due to something other than chance (where we infer that a statistically significant outcome has occurred)? This question is at the heart of inferential analysis. Why? If we meet the criteria needed to reject our null hypothesis, we can generalize our sample results to the population from which the sample was drawn, i.e., to the theoretical distribution of all raw scores in a population.

We answer this important question in three parts.

First, we need a model of all possible statistical outcomes of that specific statistical test and their associated probabilities of occurrence. This model is called a sampling distribution of a given statistic. Each sampling distribution of a statistic (such as z, t, Chi-Square, etc.) is generally found in the back of your statistics or research methods textbook, so you never have to create a sampling distribution on your own. To reiterate the point, such a model gives us:

· all possible outcomes of a given statistic and

· the associated probabilities of occurrence of each statistical outcome
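To make the idea concrete, here is a minimal sketch that builds an approximate sampling distribution of t by brute force rather than looking it up in a textbook. Everything specific in it is my assumption for illustration: a sample size of 16, a normally distributed population in which the null hypothesis is true, and the helper names `one_sample_t` and `simulate_t_distribution`. It draws many random samples, computes t for each, and then asks how often an outcome at least as extreme as our example (t=2.13) turns up by chance alone.

```python
import math
import random

def one_sample_t(sample, mu0):
    """One-sample t statistic: (sample mean - mu0) / (s / sqrt(n))."""
    n = len(sample)
    mean = sum(sample) / n
    # Sample variance uses the n - 1 denominator (the degrees of freedom for t).
    variance = sum((x - mean) ** 2 for x in sample) / (n - 1)
    return (mean - mu0) / (math.sqrt(variance) / math.sqrt(n))

def simulate_t_distribution(n, reps, seed=0):
    """Collect t from many samples drawn from a population where the null is true."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    outcomes = []
    for _ in range(reps):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        outcomes.append(one_sample_t(sample, mu0=0.0))
    return outcomes

# Assumed sample size of 16 (so df = 15); the post does not state n for t=2.13.
t_values = simulate_t_distribution(n=16, reps=20000)

# Proportion of chance outcomes at least as extreme as 2.13 in either tail.
share_extreme = sum(abs(t) >= 2.13 for t in t_values) / len(t_values)
print(f"Share of simulated |t| >= 2.13: {share_extreme:.3f}")
```

Under these assumptions the printed share should land close to .05, which is exactly the kind of probability information a published sampling distribution gives you without any simulation.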

Second, the researcher then compares the statistic that (s)he computed from sample data (sample distribution) to the statistical model that gives the probabilities associated with observing all empirical outcomes (all statistical values) of that statistic. That is, the researcher makes a comparison between (1) the original singular (one-sample) statistical outcome and (2) a statistical model (sampling distribution) that gives the probability of observing all given outcomes of that statistic. In the example above, it would be a comparison between the calculated t statistic (2.13) and the sampling distribution of all potential outcomes of t and their associated probabilities of occurrence. What you actually compare will be discussed shortly.

To visualize this relationship, consider the following diagram which divides a sampling distribution into two regions based on such a comparison. One region is referred to as the critical region (or CR). If your (one sample) statistical outcome falls in this region, you reject the null hypothesis of no difference in favor of your non-directional or directional research hypothesis (see my prior blog for this distinction). The CR is defined as that portion of a sampling distribution that leads to the rejection of the null hypothesis.

Non-Chance (CR) and Chance Regions of a Sampling Distribution

Where does the CR begin? Enter the concepts of alpha (the level of significance) and the critical value (a value that begins the CR at alpha). When alpha is set (by convention) at .05, the critical region marks off the outer 5 percent of a sampling distribution (2.5% in each tail for a non-directional hypothesis, as shown in the diagram above, and 5% in one tail for a directional hypothesis).
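As a rough illustration of how alpha marks off the critical region, the sketch below uses the z statistic, since the standard normal distribution ships with Python's standard library (`statistics.NormalDist`). The function name `z_critical` is hypothetical, chosen only for this example.

```python
from statistics import NormalDist

def z_critical(alpha=0.05, two_tailed=True):
    """Return the positive z value where the critical region begins."""
    # A non-directional hypothesis splits alpha across both tails;
    # a directional hypothesis places all of alpha in one tail.
    tail_area = alpha / 2 if two_tailed else alpha
    return NormalDist().inv_cdf(1 - tail_area)

print(round(z_critical(0.05, two_tailed=True), 3))   # 1.96  (2.5% in each tail)
print(round(z_critical(0.05, two_tailed=False), 3))  # 1.645 (5% in one tail)
```

Notice that the one-tailed critical value is smaller: placing all 5 percent in one tail starts the critical region closer to the center of the distribution.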

Where do you find a critical value for your statistic? In practice, a sampling distribution is presented as a table of critical values. To find the critical value, you need to know (1) your sample’s degrees of freedom (which will vary by each statistic; for example, it is “n-1” for t), (2) the level of significance or alpha, and (3) the type of hypothesis: whether it’s directional (one-tailed) or non-directional (two-tailed). With this information and access to a statistic’s sampling distribution, you will be able to find the critical value at alpha.
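The lookup described above can be sketched as follows. The dictionary holds only a small excerpt of the standard published t table at alpha = .05 (rounded to three decimals), and the names `T_TABLE_05` and `t_critical` are hypothetical. A real table in the back of your textbook covers many more degrees of freedom and alpha levels.

```python
# Excerpt of a t table at alpha = .05 (standard published values, 3 decimals).
# Key: degrees of freedom. Value: (one-tailed critical t, two-tailed critical t).
T_TABLE_05 = {
    5: (2.015, 2.571),
    10: (1.812, 2.228),
    15: (1.753, 2.131),
    20: (1.725, 2.086),
    30: (1.697, 2.042),
}

def t_critical(n, two_tailed=True):
    """Look up the critical t at alpha = .05 for a sample of size n."""
    df = n - 1  # degrees of freedom for t
    if df not in T_TABLE_05:
        raise KeyError(f"df={df} is not in this excerpt; consult a full t table")
    one_tailed_value, two_tailed_value = T_TABLE_05[df]
    return two_tailed_value if two_tailed else one_tailed_value

print(t_critical(16))                    # non-directional hypothesis, df = 15
print(t_critical(16, two_tailed=False))  # directional hypothesis, df = 15
```

The three inputs in the text map directly onto the code: degrees of freedom pick the row, alpha picks the table, and the type of hypothesis picks the column.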

Finally, with knowledge of your one sample statistical outcome (from the sample distribution) and the critical value of that statistic at alpha (from the sampling distribution), you are ready to plug these values into the decision rule presented in the diagram below to see if you can generalize the hypothesized results to the overall population from which the sample was drawn (to the theoretical distribution):

How to Determine if Your Outcome is Significant:

Accept or Reject the Null Hypothesis

The logic associated with this entire discussion can be summarized through the following six steps of hypothesis testing which we reviewed in class:

1. State your research hypothesis

2. State your null hypothesis

3. Set alpha (conventionally set at .05)

4. Identify the critical value of the test statistic at alpha

5. Calculate the test statistic

6. Compare your one sample statistical outcome to the critical value of that statistic at alpha. If the absolute value of the statistic you calculate from sample data meets or exceeds the absolute value of the critical value at alpha, then you will reject the null hypothesis of no difference in favor of your non-directional or directional research hypothesis.
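Step 6 can be sketched in a few lines. The function name `decide` is hypothetical, and the critical values passed in are assumptions for illustration (two-tailed values at alpha = .05 for df = 20 and df = 15), since our running example gives t=2.13 without a sample size. For a directional hypothesis you would also want to confirm that the statistic falls in the predicted tail, which this sketch does not check.

```python
def decide(statistic, critical_value):
    """Apply the decision rule: compare |statistic| to |critical value| at alpha."""
    if abs(statistic) >= abs(critical_value):
        return "reject the null hypothesis"
    return "fail to reject the null hypothesis"

# Assumed critical values: 2.086 (df = 20) and 2.131 (df = 15), two-tailed, alpha = .05.
print(decide(2.13, 2.086))  # reject the null hypothesis
print(decide(2.13, 2.131))  # fail to reject the null hypothesis
```

The same calculated t leads to different decisions under the two assumed sample sizes, which is why step 4 has to be completed with your actual degrees of freedom.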

So, in short, a distribution of all potential outcomes of a statistic and their associated probabilities of occurrence is called a sampling distribution. For reasons we just examined, sampling distributions are an essential part of inferential analysis.

I hope this helps.

Professor Ziner
