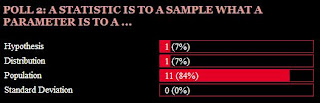

The results of our first class poll (see inset chart below) reveal that most participants correctly identified that inferential analysis involves two important forms of statistical research. The first is that we estimate population (N) parameters. Parameters describe populations of interest and include the mean of the population (µ) and its standard deviation (σ). Since researchers do not always know these characteristics of a population under study, they must be estimated from sample data. In the case of µ, we calculate confidence intervals (usually based on 95% or 99% confidence) to assess the range within which the true population parameter is likely to fall. If you provided a single estimate of 190 lbs. for my weight, for example, how confident would you be that it’s correct? If you then widened your point estimate to an interval estimate of 180-200 lbs., wouldn’t you be more confident that my true weight is in this range? How about 160-220 lbs.? The point is that the wider the interval, the greater our confidence that the true µ falls within that interval.
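To make the link between interval width and confidence concrete, here is a minimal Python sketch. The sample of weights is hypothetical (invented for illustration, not class data), and the helper name `mean_confidence_interval` is my own. It also assumes a normal (z) approximation; with a sample this small, a t-based interval would normally be the better choice and would come out somewhat wider.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def mean_confidence_interval(sample, confidence=0.95):
    """Return (lower, upper) bounds for the population mean (µ).

    Uses a normal (z) approximation, which is an assumption made here
    to keep the sketch dependent on the standard library only.
    """
    n = len(sample)
    x_bar = mean(sample)                      # sample mean, our point estimate of µ
    standard_error = stdev(sample) / sqrt(n)  # estimated standard error of the mean
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # two-sided critical value
    margin = z * standard_error
    return x_bar - margin, x_bar + margin


# Hypothetical sample of weights in lbs. (made up for illustration)
weights = [182, 195, 188, 201, 176, 190, 185, 198, 192, 187]

ci_95 = mean_confidence_interval(weights, 0.95)
ci_99 = mean_confidence_interval(weights, 0.99)
width_95 = ci_95[1] - ci_95[0]
width_99 = ci_99[1] - ci_99[0]

print(f"95% interval: {ci_95[0]:.1f} to {ci_95[1]:.1f} lbs. (width {width_95:.1f})")
print(f"99% interval: {ci_99[0]:.1f} to {ci_99[1]:.1f} lbs. (width {width_99:.1f})")
```

For the same sample, the 99% interval is always wider than the 95% interval: the price of more confidence is a less precise estimate.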

Second, inferential analysis also involves hypothesis testing, a building block of research. A hypothesis is a statement of the relationship between two (or more) variables where one is seen as the independent variable (symbolized as X or the “cause”) and the other is the dependent variable (symbolized as Y or the “effect”). What is the effect of cigarette smoking (X) on rates of cancer (Y)? Hours studying statistics (X) on course grade performance (Y)? Size of a company (X) on employee job satisfaction (Y)? When we are interested in determining the statistical significance of these relationships by testing the effects of one variable on the other, we test hypotheses. Note that we cannot determine causality through hypothesis testing alone. Causality involves demonstrating statistical covariation, determining time-order (via variable manipulation procedures), and establishing statistical control (removing the possibility that some third factor can explain away X and Y’s significant covariation). Of the three elements of causality (covariation, manipulation and control), hypothesis testing provides the means to demonstrate statistical covariation of a bivariate (two-variable or "X on Y") relationship, an important first step in understanding and explaining the world around us.
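As a rough illustration of testing a bivariate hypothesis, the Python sketch below checks whether hours studying (X) covaries with course grade (Y). The data are hypothetical, and the permutation test is one technique I have chosen for the example because it needs only the standard library; it is not the only (or necessarily the usual classroom) way to test such a hypothesis. The function names `pearson_r` and `permutation_p_value` are my own.

```python
import random
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    covariation = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sum((a - mean_x) ** 2 for a in x)
    spread_y = sum((b - mean_y) ** 2 for b in y)
    return covariation / sqrt(spread_x * spread_y)


def permutation_p_value(x, y, n_permutations=5000, seed=0):
    """Two-sided p-value for the null hypothesis of no X-Y relationship.

    Shuffling Y breaks any real link to X, so the shuffled correlations
    show what chance alone would produce.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    observed = abs(pearson_r(x, y))
    shuffled = list(y)
    as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(shuffled)
        if abs(pearson_r(x, shuffled)) >= observed:
            as_extreme += 1
    # Add 1 to numerator and denominator so the p-value is never exactly 0
    return (as_extreme + 1) / (n_permutations + 1)


# Hypothetical data (made up for illustration)
hours = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]               # X: hours studying
grades = [52, 58, 61, 60, 70, 74, 73, 82, 85, 91]     # Y: course grade

r = pearson_r(hours, grades)
p = permutation_p_value(hours, grades)
print(f"r = {r:.3f}, p = {p:.4f}")
```

A small p-value here demonstrates statistical covariation only. It says nothing about time-order or about third factors, so it cannot establish causality by itself.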

The last two choices, “Generalizing to Samples” and “Heavy Drinking on Weekends,” are simply wrong. While conducting inferential analysis may lead some people to drink heavily on weekends (an interesting study in its own right), the process does not involve such behavior any day of the week.

I encourage you to participate in future class polls found at Broken Pencils! I will always analyze the results when the polling period expires and examine the answers to the questions I pose.

NOTE: Percentages do not add up to 100. This poll gave students the option of choosing more than one response. Future polls will be limited to one choice.